Benefits of using NVMe over PCI Express Fabrics

Reduced communication overhead

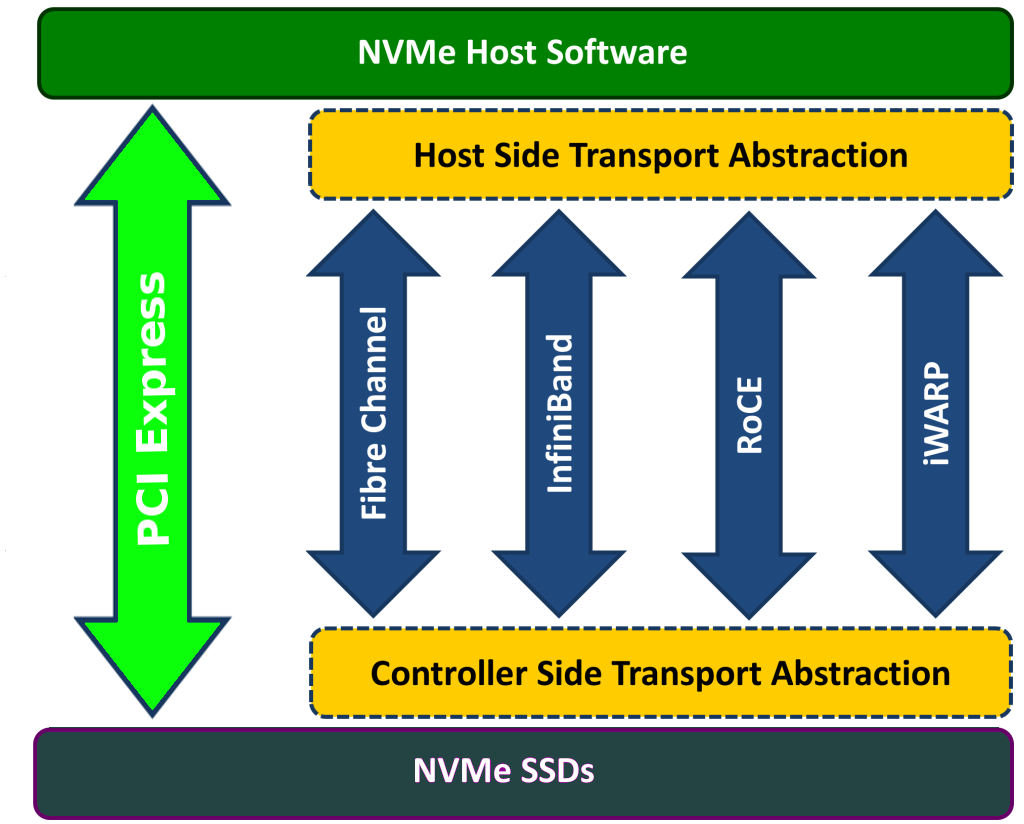

NVM Express (NVMe) over Fabrics defines a common architecture for carrying the NVMe block storage protocol over a networking fabric, and supports a range of networking hardware (e.g. InfiniBand, RoCE, iWARP).

NVMe devices have a direct PCIe interface. NVMe over Fabrics defines software stacks that implement a transport abstraction layer on each side of the fabric interface, translating native PCIe transactions and disk operations for transfer over the fabric.

A PCIe fabric is different. Native PCIe transactions (TLPs) are forwarded automatically over the fabric with no protocol conversion. Standard PCIe NT technology routes the PCIe traffic from the host computer to the NVMe device, and device interrupts are routed through the PCIe fabric in the same way. The image above illustrates the difference between a PCIe fabric and other fabrics. Because a PCIe fabric eliminates the transport abstraction layer, it provides much lower latency, while still supporting features such as RDMA.

SmartIO Dynamic Device Lending

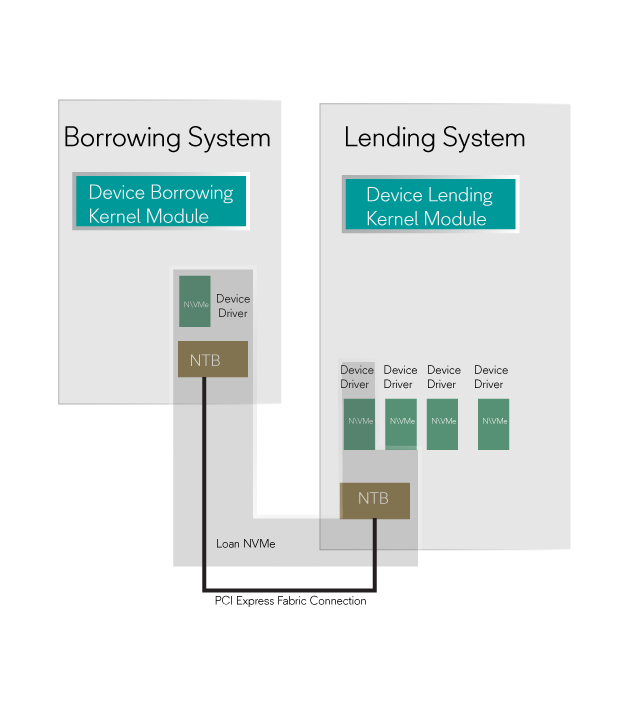

PCIe NTB switches are used to form the PCIe fabric. PCIe transactions are routed through the fabric automatically, but software is needed to set up the routing between the systems and devices. Dolphin's eXpressWare includes software that enables customers to create an efficient and resilient PCIe fabric.

Dolphin's PCIe SmartIO Device Lending software uses some resources to establish and remove remote access, but it is not actively involved in the communication between the device and the system. Devices such as NVMe drives can be borrowed from various systems in a PCIe fabric.
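Since the comparison in this document lists the standard NVMe driver for NVMe over PCIe fabrics, a borrowed drive would presumably show up on the borrowing Linux host like any other PCIe NVMe controller. The sketch below is not part of eXpressWare. It only reads the standard Linux sysfs layout (`/sys/class/nvme`), and the assumption that a borrowed device appears there is ours, not a statement from Dolphin. The function name `list_nvme_controllers` is hypothetical.

```python
import os


def list_nvme_controllers(sysfs_root="/sys/class/nvme"):
    """Return (controller_name, pci_address) pairs found under sysfs_root.

    pci_address is None when a controller has no "device" link.
    Assumption: a borrowed NVMe drive is listed here like a local one.
    """
    controllers = []
    if not os.path.isdir(sysfs_root):
        return controllers
    for name in sorted(os.listdir(sysfs_root)):
        device_link = os.path.join(sysfs_root, name, "device")
        if os.path.exists(device_link):
            # "device" links to the parent PCI function; the last path
            # component is its address (domain:bus:device.function).
            pci_address = os.path.basename(os.path.realpath(device_link))
        else:
            pci_address = None
        controllers.append((name, pci_address))
    return controllers


if __name__ == "__main__":
    for name, address in list_nvme_controllers():
        print(f"{name}: {address}")
```

Running the script before and after a device is borrowed would be one way to check which controller was added, under the assumption stated above.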

Comparison to other NVMe over Fabrics solutions

The table below summarizes the differences between local NVMe access and NVMe over Fabrics alternatives.

| Technology | Local PCIe | NVMe over PCIe Fabrics | NVMe over RDMA Fabrics |
|---|---|---|---|
| Identification | Bus/Device/Function | NodeId / FabricId | NVMe Qualified Name (NQN) |
| Data transport | PCIe TLPs | PCIe TLPs | RDMA |
| Queueing | Host Memory | Host Memory | Message-based |
| Latency | Local NVMe | Local NVMe + 700 nanoseconds | Local NVMe + 10 microseconds |
| NVMe driver | Standard NVMe driver | Standard NVMe driver | Special NVMe over Fabrics software |
| Transport technology | Standard PCIe chipsets | Standard PCIe chipsets | InfiniBand, RoCE, iWARP, Fibre Channel |
| Scalability | Single host | 1-8 hosts* | |

*Support for more hosts is available on demand; please contact Dolphin.

| Feature | NVMe over PCIe Fabrics |
|---|---|
| Ease of use | No modifications to Linux or device drivers. Works with all PCIe based storage solutions. |
| Reliability | Self-throttling, guaranteed delivery at hardware level, no dropped frames or packets due to congestion. Support for hot plugging of cables, full error containment and transparent recovery. |
| NVMe Optimized Client | Send and receive native NVMe commands directly to and from the fabric. |
| Low Latency Fabric | Optimized for low latency. Data transfer latency as low as 540 ns. |
| Direct memory access | Supports direct memory region access for applications. |
| Fabric scaling | Scales to 100s of devices or more. |
| Multi-port support | Multiple ports for simultaneous communication. |

Performance

The NVMe over PCIe Fabric solution does not include any software abstraction layers. The remote system and the NVMe device communicate without using CPU or memory resources in the system where the NVMe device is physically located.

For other NVMe over Fabrics solutions, the dominant part of the added latency originates from the software emulation layers and is around 10 microseconds end to end. The NVMe over PCIe Fabric solution has no software emulation layer and adds no more than around 700 nanoseconds (depending on the computer and I/O system). This latency comes from crossing a few PCIe bridges in the PCIe fabric rather than from a software emulation layer.
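To put the two figures side by side, the short sketch below adds each overhead to a local access latency and expresses it as a percentage. The added latencies (700 ns and 10 microseconds) come from the text above. The local latency of 80 microseconds is a purely hypothetical value chosen for illustration, not a measurement, and the function names are ours.

```python
# Added latency per fabric type, as stated in the text (approximate).
PCIE_FABRIC_ADDED_NS = 700        # around 700 nanoseconds
RDMA_FABRIC_ADDED_NS = 10_000     # around 10 microseconds

# Hypothetical local NVMe access latency, for illustration only.
ASSUMED_LOCAL_NS = 80_000


def remote_latency_ns(local_ns, added_ns):
    """Remote access latency: local latency plus the fabric overhead."""
    return local_ns + added_ns


def overhead_percent(local_ns, added_ns):
    """Fabric overhead relative to the local latency, in percent."""
    return 100.0 * added_ns / local_ns


if __name__ == "__main__":
    for label, added in (("PCIe fabric", PCIE_FABRIC_ADDED_NS),
                         ("RDMA fabric", RDMA_FABRIC_ADDED_NS)):
        total = remote_latency_ns(ASSUMED_LOCAL_NS, added)
        pct = overhead_percent(ASSUMED_LOCAL_NS, added)
        print(f"{label}: {total} ns total, {pct:.3f}% over local")
```

With the assumed 80 microsecond local latency, the PCIe fabric adds 0.875% and the RDMA fabric adds 12.5%. A faster local device would make both percentages larger, but the ratio between the two overheads stays the same.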

The NVMe over PCIe fabric supports device DMA engines. These engines typically move data between the device and memory in the system borrowing the device. No network-specific RDMA is needed.

Resilience

The NVMe over PCIe fabric is resilient to errors. The eXpressWare driver implements full error containment and will ensure that no user data is lost due to transient errors. PCIe NTB managed cables can be hot plugged, and the remote NVMe will only be unavailable while the cable is disconnected.

Availability

The SmartIO Device Lending software is included with the eXpressWare 5.5.0 release and newer for Linux. The solution is available with all of Dolphin's NTB-enabled host cards. Intel and AMD x64 servers are supported. The solution is also available for some ARM systems; please contact Dolphin for details.

SISCI SmartIO with NVMe

Dolphin has developed a user space software API (SISCI SmartIO) that enables concurrent sharing of single-function devices between nodes connected to a PCIe fabric. As a test case for the new SmartIO API, we have implemented a user space NVMe driver that allows a single-function SSD to be shared concurrently among multiple nodes over a PCIe fabric. More information on SISCI SmartIO.